Memory that can be shared between threads is called shared memory or heap memory. The term variable as used in this section refers to both fields and array elements [JLS 05]. Variables that are shared between threads are referred to as shared variables. All instance fields, static fields, and array elements are shared variables and are stored in heap memory. Local variables, formal method parameters, and exception handler parameters are never shared between threads and are unaffected by the memory model.

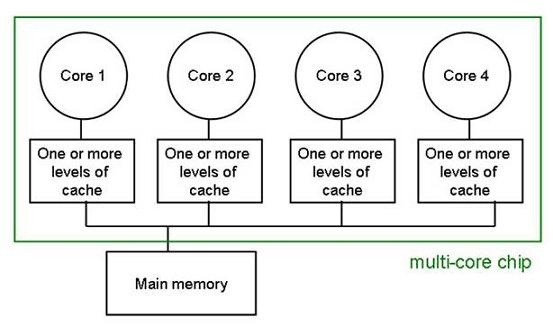

In modern shared-memory multiprocessor architectures, each processor has one or more levels of cache that are periodically reconciled with main memory as shown in the following figure:

The visibility of writes to shared variables can be problematic because the value of a shared variable may be cached; writing its value to main memory may be delayed. Consequently, another thread may read a stale value of the variable.

A further concern is not only that concurrent executions of code are typically interleaved, but also that statements may be reordered by the compiler or runtime system to optimize performance. This results in execution orders that are difficult to discern by examination of the source code. Failure to account for possible reorderings is a common source of data races.

Consider the following example in which a and b are (shared) static or instance fields, but r1 and r2 are local variables that are inaccessible to other threads.

Initially, let a = 0 and b = 0.

| Thread 1 | Thread 2 |
|---|---|
| a = 10; | b = 20; |
| r1 = b; | r2 = a; |

In Thread 1, the two assignments a = 10; and r1 = b; are unrelated, so the compiler or runtime system is free to reorder them. The two assignments in Thread 2 may also be freely reordered. Although it may seem counter-intuitive, the Java memory model allows a read to see the value of a write that occurs later in the apparent execution order.

A possible execution order showing actual assignments is:

| Execution Order (Time) | Thread# | Assignment | Assigned Value | Notes |
|---|---|---|---|---|
| 1. | t1 | a = 10; | 10 | |
| 2. | t2 | b = 20; | 20 | |
| 3. | t1 | r1 = b; | 0 | Reads initial value of b, that is, 0 |
| 4. | t2 | r2 = a; | 0 | Reads initial value of a, that is, 0 |

In this ordering, r1 and r2 read the original values of the variables b and a respectively, even though they are expected to see the updated values, 20 and 10. Another possible execution order showing actual assignments is:

| Execution Order (Time) | Thread# | Assignment | Assigned Value | Notes |
|---|---|---|---|---|
| 1. | t1 | r1 = b; | 20 | Reads the value written later (in step 4), that is, 20 |
| 2. | t2 | r2 = a; | 10 | Reads the value written later (in step 3), that is, 10 |
| 3. | t1 | a = 10; | 10 | |
| 4. | t2 | b = 20; | 20 | |

In this ordering, r1 and r2 read the values of b and a written in steps 4 and 3, respectively, even before the statements corresponding to these steps have executed.
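The orderings above can be probed with a small harness. The following is a minimal sketch, not a reliable test: it assumes `a` and `b` are static fields of a hypothetical `ReorderingLitmus` class and reruns the example many times, recording which (r1, r2) pairs appear. The memory model permits all four combinations, but whether the surprising r1 = 0, r2 = 0 result is ever observed depends on the JIT compiler, the processor, and thread timing, so a run that never shows it proves nothing.

```java
import java.util.Set;
import java.util.TreeSet;

class ReorderingLitmus {
  // Shared fields, deliberately neither volatile nor accessed under a lock (a data race)
  static int a, b;

  // Runs the two-thread example 'iterations' times and records each (r1, r2) pair seen
  static Set<String> observe(int iterations) throws InterruptedException {
    Set<String> seen = new TreeSet<>();
    for (int i = 0; i < iterations; i++) {
      a = 0;
      b = 0;                      // reset before start(): both threads see the initial 0s
      int[] results = new int[2]; // copies of r1 and r2, read only after join()
      Thread t1 = new Thread(() -> {
        a = 10;
        int r1 = b;               // local variable, as in the example
        results[0] = r1;
      });
      Thread t2 = new Thread(() -> {
        b = 20;
        int r2 = a;
        results[1] = r2;
      });
      t1.start();
      t2.start();
      t1.join();
      t2.join();
      seen.add("r1=" + results[0] + ", r2=" + results[1]);
    }
    return seen;
  }

  public static void main(String[] args) throws InterruptedException {
    // Which pairs show up depends on the JIT, the hardware, and timing
    System.out.println(observe(2_000));
  }
}
```

Thread creation dominates each iteration, which makes the racy window small; purpose-built tools such as the OpenJDK jcstress harness are better suited to actually provoking such outcomes.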

Restricting the set of possible reorderings makes it easier to reason about the correctness of the code.

Even when statements execute in the order of their appearance in a thread, caching can prevent the latest values from being reflected in the main memory.

The Java Language Specification defines the Java Memory Model (JMM), which provides certain guarantees to the Java programmer. The JMM is specified in terms of actions, including variable reads and writes, monitor locks and unlocks, and thread starts and joins. The JMM defines a partial ordering called happens-before on all actions within the program. To guarantee that a thread executing action B can see the results of action A, for example, there must be a happens-before relationship defined such that A happens-before B.

According to section 17.4.5 "Happens-before Order" of the Java Language Specification [JLS 05]:

- An unlock on a monitor happens-before every subsequent lock on that monitor.

- A write to a volatile field happens-before every subsequent read of that field.

- A call to start() on a thread happens-before any actions in the started thread.

- All actions in a thread happen-before any other thread successfully returns from a join() on that thread.

- The default initialization of any object happens-before any other actions (other than default-writes) of a program.

- A thread calling interrupt on another thread happens-before the interrupted thread detects the interrupt.

- The end of a constructor for an object happens-before the start of the finalizer for that object.
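The start() and join() rules can be illustrated with a short sketch. In the hypothetical `StartJoinVisibility` class below, neither field is volatile and no lock is used. The worker sees the write to `input` because that write precedes `start()`, and the caller sees the worker's write to `output` because that write precedes the return from `join()`.

```java
class StartJoinVisibility {
  // Plain (non-volatile) fields; start() and join() supply the ordering
  static int input;
  static int output;

  static int compute(int value) throws InterruptedException {
    input = value;                                     // written before start(): visible to the worker
    Thread worker = new Thread(() -> output = input * 2);
    worker.start();                                    // start() happens-before the worker's actions
    worker.join();                                     // worker's actions happen-before join() returns
    return output;                                     // guaranteed to see the worker's write
  }

  public static void main(String[] args) throws InterruptedException {
    System.out.println(compute(21)); // prints 42
  }
}
```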

When two operations lack a happens-before relationship, the Java Virtual Machine (JVM) is free to reorder them. A data race occurs when a variable is written to by at least one thread and read by at least one other thread, and the reads and writes lack a happens-before relationship. A correctly synchronized program is one that lacks data races. The JMM guarantees sequential consistency for correctly synchronized programs. Sequential consistency means that the result of any execution is the same as if the reads and writes on shared data by all threads were executed in some sequential order, and the operations of each individual thread appear in this sequence in the order specified by its program [Tanenbaum 03]. In other words:

- Take the read and write operations performed by each thread and put them in the order in which the thread executes them (thread order).

- Interleave the operations in some way allowed by the happens-before relationships to form an execution order (program order).

- For the execution to be sequentially consistent, each read operation must return the most recently written value in the resulting total order.

- This implies that all threads see the same total ordering of reads and writes of shared variables.
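To make the definition concrete, the following sketch enumerates every interleaving of the earlier example (Thread 1: `a = 10; r1 = b;`, Thread 2: `b = 20; r2 = a;`) that keeps each thread's statements in thread order, and executes each interleaving against a single memory with no caching or reordering. The class name and the string encoding of outcomes are illustrative choices. Under sequential consistency the outcome r1 = 0, r2 = 0 never appears, so that result in the earlier execution order can arise only from reordering.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

class SequentialConsistency {
  // Thread 1: a = 10; r1 = b;    Thread 2: b = 20; r2 = a;

  static Set<String> outcomes() {
    Set<String> results = new TreeSet<>();
    interleave(0, 0, new ArrayList<>(), results);
    return results;
  }

  // i, j: number of statements already scheduled from Thread 1 / Thread 2
  static void interleave(int i, int j, List<Integer> order, Set<String> results) {
    if (i == 2 && j == 2) {
      results.add(execute(order));
      return;
    }
    if (i < 2) {
      order.add(1);
      interleave(i + 1, j, order, results);
      order.remove(order.size() - 1); // backtrack
    }
    if (j < 2) {
      order.add(2);
      interleave(i, j + 1, order, results);
      order.remove(order.size() - 1); // backtrack
    }
  }

  // Runs one interleaving against a single shared memory: no caches, no reordering
  static String execute(List<Integer> order) {
    int a = 0, b = 0, r1 = 0, r2 = 0;
    int pc1 = 0, pc2 = 0; // per-thread program counters
    for (int t : order) {
      if (t == 1) {
        if (pc1++ == 0) a = 10; else r1 = b;
      } else {
        if (pc2++ == 0) b = 20; else r2 = a;
      }
    }
    return "r1=" + r1 + ", r2=" + r2;
  }

  public static void main(String[] args) {
    System.out.println(outcomes());
  }
}
```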

The actual execution order of instructions and memory accesses can vary as long as the actions of the thread appear to that thread as if program order were followed, and provided all values read are allowed for by the memory model. This allows the programmer to understand the semantics of the programs they write, and allows compiler writers and virtual machine implementors to perform various optimizations [JPL 06].

There are several concurrency primitives that can help a programmer reason about the semantics of multithreaded programs.

The volatile Keyword

Declaring shared variables as volatile ensures visibility and limits reordering of accesses. Volatile accesses lack a guarantee of the atomicity of composite operations such as incrementing a variable. Consequently, use of volatile is insufficient for cases where the atomicity of composite operations must be guaranteed (see VNA02-J. Ensure that compound operations on shared variables are atomic for more information).

Declaring a variable as volatile establishes a happens-before relationship such that a write to a volatile variable is always seen by threads performing subsequent reads of the same variable. Statements that occur before the write to the volatile variable also happen-before any subsequent reads of that variable.
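A common use of this guarantee is a stop flag. In the minimal sketch below (the `StopFlag` class and its methods are hypothetical), the worker thread reads the volatile field `running` on every loop iteration, so the write performed by `stop()` is guaranteed to become visible to it and the loop terminates. Without volatile, the JIT compiler could legally hoist the read out of the loop, and the worker might spin forever.

```java
class StopFlag {
  // volatile: the worker is guaranteed to see the write made by stop()
  private volatile boolean running = true;
  private long iterations; // written by the worker, read by the caller only after join()

  void stop() {
    running = false;
  }

  long runFor(long millis) throws InterruptedException {
    Thread worker = new Thread(() -> {
      while (running) {    // volatile read on every iteration
        iterations++;
      }
    });
    worker.start();
    Thread.sleep(millis);
    stop();                // volatile write: subsequent reads of 'running' observe false
    worker.join();         // returns because the write became visible to the worker
    return iterations;     // safe to read: join() orders the worker's writes before this
  }

  public static void main(String[] args) throws InterruptedException {
    System.out.println("Worker looped " + new StopFlag().runFor(100) + " times");
  }
}
```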

Consider two threads that are executing some statements:

Thread 1 and Thread 2 have a happens-before relationship such that Thread 2 cannot start before Thread 1 finishes.

In this example, statement 3 writes to a volatile variable, and statement 4 (in Thread 2) reads the same volatile variable. The read sees the most recent write (to the same variable v) from statement 3.

Volatile reads and writes cannot be reordered with respect to each other. Their reordering with respect to non-volatile accesses is also restricted: no access that precedes a volatile write in program order may be moved after it, and no access that follows a volatile read may be moved before it. Consequently, when Thread 2 reads the volatile variable, it sees the results of all the writes occurring before the write to the volatile variable in Thread 1. Because of the relatively strong guarantees of volatile, the performance overhead of volatile is almost the same as that of synchronization.

The previous example lacks a guarantee that statements 1 and 2 will be executed in the order in which they appear in the program. They may be freely reordered by the compiler because of the absence of a happens-before relationship between these two statements.
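The sketch below restates the example in code, assuming two plain fields and a volatile flag named `ready` (all names are hypothetical, and the statement numbers in the comments are an assumed mapping onto the example above). Statements 1 and 2 may be reordered with each other, but once Thread 2's volatile read observes `true`, both plain writes are guaranteed to be visible, so `reader()` always returns 3.

```java
class VolatilePublication {
  private int first;              // non-volatile
  private int second;             // non-volatile
  private volatile boolean ready; // volatile guard

  // Thread 1: statements 1 and 2 may be reordered with each other,
  // but neither may be moved after the volatile write in statement 3
  void writer() {
    first = 1;     // statement 1
    second = 2;    // statement 2
    ready = true;  // statement 3: volatile write
  }

  // Thread 2: once the volatile read sees true, statements 1 and 2 are visible
  int reader() {
    while (!ready) {          // statement 4: volatile read
      Thread.onSpinWait();
    }
    return first + second;    // always 3
  }

  static int demo() throws InterruptedException {
    VolatilePublication p = new VolatilePublication();
    int[] result = new int[1];
    Thread t2 = new Thread(() -> result[0] = p.reader());
    Thread t1 = new Thread(p::writer);
    t2.start();   // the reader may start spinning before the writer runs
    t1.start();
    t1.join();
    t2.join();    // join() makes result[0] visible here
    return result[0];
  }

  public static void main(String[] args) throws InterruptedException {
    System.out.println(demo());
  }
}
```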

The possible reorderings between volatile and non-volatile variables are summarized in the matrix shown below [Lea 08]. Load and store operations are synonymous with read and write operations, respectively.

Note that the visibility and ordering guarantees provided by the volatile keyword apply specifically to the variable itself, that is, they apply only to primitive fields and object references. Programmers commonly use imprecise terminology, and speak about "member objects." For the purposes of these guarantees, the actual member is the object reference itself; the objects referred to by volatile object references (the "referents", hereafter) are beyond the scope of the guarantees. Consequently, declaring an object reference to be volatile is insufficient to guarantee that changes to the members of the referent are visible. That is, a thread may fail to observe a recent write from another thread to a member field of such a referent. Furthermore, when the referent is mutable and lacks thread-safety, other threads might see a partially constructed object or an object in a (temporarily) inconsistent state [Goetz 2007]. However, when the referent is immutable, declaring the reference volatile suffices to guarantee visibility of the members of the referent.
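A minimal sketch of the immutable case follows. The hypothetical `Config` class is immutable (final class, final fields, no setters), so the only state that ever changes is the volatile reference in the hypothetical `ConfigHolder`. Updates publish a complete new object instead of modifying the current one, so a reader sees either the old or the new configuration, never a mix of the two.

```java
class ConfigHolder {
  // Immutable referent: final class, final fields, no setters
  static final class Config {
    private final String host;
    private final int port;

    Config(String host, int port) {
      this.host = host;
      this.port = port;
    }

    String host() { return host; }
    int port() { return port; }
  }

  // Only the reference is volatile; readers always see a fully built Config
  private volatile Config current = new Config("localhost", 8080);

  Config get() {
    return current;
  }

  // Publish a new immutable object instead of mutating the current one
  void update(String host, int port) {
    current = new Config(host, port);
  }

  public static void main(String[] args) {
    ConfigHolder holder = new ConfigHolder();
    holder.update("example.org", 443);
    Config c = holder.get(); // read the reference once, then use that snapshot
    System.out.println(c.host() + ":" + c.port());
  }
}
```

If a new value depends on the old one (a read-modify-write), the volatile reference alone is not enough; an `AtomicReference` with `compareAndSet()` or a lock would be needed.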

Synchronization

A correctly synchronized program is one whose sequentially consistent executions lack data races. The example shown below uses a non-volatile variable x and a volatile variable y. It is incorrectly synchronized.

| Thread 1 | Thread 2 |
|---|---|
| x = 1 | r1 = y |
| y = 2 | r2 = x |

There are two sequentially consistent execution orders of this example:

| Step (Time) | Thread# | Statement | Comment |
|---|---|---|---|
| 1. | t1 | x = 1 | Write to non-volatile variable |
| 2. | t1 | y = 2 | Write to volatile variable |
| 3. | t2 | r1 = y | Read of volatile variable |
| 4. | t2 | r2 = x | Read of non-volatile variable |

and,

| Step (Time) | Thread# | Statement | Comment |
|---|---|---|---|
| 1. | t2 | r1 = y | Read of volatile variable |
| 2. | t2 | r2 = x | Read of non-volatile variable |
| 3. | t1 | x = 1 | Write to non-volatile variable |
| 4. | t1 | y = 2 | Write to volatile variable |

In the first case, there is a happens-before relationship between actions such that steps 1 and 2 always occur before steps 3 and 4. However, in the second case there is no happens-before relationship between the write to x in step 3 and the read of x in step 2. Consequently, because there is a sequentially consistent execution containing conflicting accesses that lack a happens-before relationship, this example contains data races.
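One way to remove the data race is to perform each thread's actions while holding the same intrinsic lock. The sketch below assumes a hypothetical `LockedAssign` class with a dedicated `lock` object. Both sequentially consistent orders remain possible, but every conflicting access is now ordered by happens-before, so the program is correctly synchronized and y no longer needs to be volatile. The only outcomes are r1 = 0, r2 = 0 (Thread 2 ran first) and r1 = 2, r2 = 1 (Thread 1 ran first).

```java
import java.util.Set;
import java.util.TreeSet;

class LockedAssign {
  private final Object lock = new Object();
  private int x, y;   // plain fields: every access below holds 'lock'
  private int r1, r2;

  // One run of the x/y example with both threads' actions performed under the same lock
  String runOnce() throws InterruptedException {
    Thread t1 = new Thread(() -> {
      synchronized (lock) { x = 1; y = 2; }    // Thread 1 actions
    });
    Thread t2 = new Thread(() -> {
      synchronized (lock) { r1 = y; r2 = x; }  // Thread 2 actions
    });
    t1.start();
    t2.start();
    t1.join();
    t2.join();                                  // join() makes r1 and r2 visible here
    return "r1=" + r1 + ", r2=" + r2;
  }

  public static void main(String[] args) throws InterruptedException {
    Set<String> seen = new TreeSet<>();
    for (int i = 0; i < 1_000; i++) {
      seen.add(new LockedAssign().runOnce());
    }
    System.out.println(seen); // only "r1=0, r2=0" and "r1=2, r2=1" are possible
  }
}
```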

Correct visibility guarantees that multiple threads accessing shared data can view each other's results, but does not establish the order in which each thread reads or writes the data. Correct synchronization both provides correct visibility and guarantees that threads access data in a proper order. For example, the code shown below ensures that there is only one sequentially consistent execution order, which performs all the actions of Thread 1 before those of Thread 2.

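```java
class Assign {
  int x, y;   // Written by Thread 1
  int r1, r2; // Written by Thread 2

  public synchronized void doSomething() {
    Thread t1 = new Thread() {
      public void run() {
        // Perform Thread 1 actions
        x = 1;
        y = 2;
      }
    };

    Thread t2 = new Thread() {
      public void run() {
        // Perform Thread 2 actions
        r1 = y;
        r2 = x;
      }
    };

    t1.start();
    try {
      t1.join();  // Every action of t1 happens-before join() returns
    } catch (InterruptedException ie) {
      Thread.currentThread().interrupt(); // Preserve the interrupt status
      return;                             // Do not start t2 if t1 may be unfinished
    }
    t2.start();   // start() happens-before every action of t2
  }
}
```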

When using synchronization, it is unnecessary to declare the variable y as volatile. Synchronization involves acquiring a lock, performing operations, and then releasing the lock. In the above example, the doSomething() method acquires the intrinsic lock of the Assign instance on which it is invoked (this). This example can also be written to use block synchronization:

```java
class Assign {
  public void doSomething() {
    synchronized (this) {
      // ... rest of doSomething() body ...
    }
  }
}
```

The intrinsic lock used in both examples is the same.

The java.util.concurrent Classes

Atomic Classes

Volatile variables are useful for guaranteeing visibility. However, they are insufficient for ensuring atomicity. Synchronization fills this gap but incurs the overhead of context switching and can cause lock contention. The atomic classes of package java.util.concurrent.atomic provide a mechanism for reducing contention in most practical environments while at the same time ensuring atomicity. According to Goetz and colleagues [Goetz 06]:

With low to moderate contention, atomics offer better scalability; with high contention, locks offer better contention avoidance.

The atomic classes consist of implementations that exploit the design of modern processors by exposing commonly needed functionality to the programmer. For example, the AtomicInteger.incrementAndGet() method can be used for atomically incrementing a variable. The compare-and-swap instruction(s) provided by modern processors offer more fine-grained control and can be used directly by invoking high-level methods such as java.util.concurrent.atomic.Atomic*.compareAndSet() where the asterisk can be, for example, an Integer, Long or Boolean.
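The sketch below shows both styles on a hypothetical `AtomicCounterDemo` class: `incrementAndGet()` for a plain atomic increment, and an explicit `compareAndSet()` retry loop for an update rule (here, an assumed upper bound) that is not a single built-in operation. Four threads incrementing the same counter always produce the exact total, with no lock.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {
  private final AtomicInteger count = new AtomicInteger();

  // Atomic increment: one call, no lock, no lost updates
  int increment() {
    return count.incrementAndGet();
  }

  // Hypothetical bounded increment built from an explicit compare-and-set retry loop
  int incrementUpTo(int max) {
    while (true) {
      int current = count.get();
      if (current >= max) {
        return current;          // already at the bound; leave the value unchanged
      }
      if (count.compareAndSet(current, current + 1)) {
        return current + 1;      // our update won
      }
      // Another thread changed the value between get() and compareAndSet(); retry
    }
  }

  int get() {
    return count.get();
  }

  // Increments one shared counter from several threads and returns the final value
  static int countWithThreads(int threads, int perThread) throws InterruptedException {
    AtomicCounterDemo demo = new AtomicCounterDemo();
    Thread[] workers = new Thread[threads];
    for (int i = 0; i < threads; i++) {
      workers[i] = new Thread(() -> {
        for (int k = 0; k < perThread; k++) {
          demo.increment();
        }
      });
      workers[i].start();
    }
    for (Thread w : workers) {
      w.join();
    }
    return demo.get();
  }

  public static void main(String[] args) throws InterruptedException {
    System.out.println(countWithThreads(4, 10_000)); // always 40000
  }
}
```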

The Executor Framework

The java.util.concurrent package provides the Executor framework, which offers a mechanism for executing tasks concurrently. A task is a logical unit of work encapsulated by a class that implements Runnable or Callable. The Executor framework allows task submission to be decoupled from low-level scheduling and thread management details. It provides the thread pool mechanism that allows a system to degrade gracefully when presented with more requests than the system can handle simultaneously.

The Executor interface is the core interface of the framework and is extended by the ExecutorService interface that provides facilities for thread pool termination and obtaining return values of tasks (Futures). The ExecutorService interface is further extended by the ScheduledExecutorService interface that provides a way to run tasks periodically or after some delay. The Executors class provides several factory and utility methods that are pre-configured with commonly used configurations of Executor, ExecutorService and other related interfaces. For example, the Executors.newFixedThreadPool() method returns a fixed-size thread pool with an upper limit on the number of concurrently executing tasks, and maintains an unbounded queue for holding tasks while the thread pool is full. The base (actual) implementation of the thread pool is provided by the ThreadPoolExecutor class. This class can be instantiated to customize the task execution policy.
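As a sketch of typical use (the `ExecutorDemo` class and the sum-of-squares workload are illustrative assumptions), the code below submits Callable tasks to a pool from `Executors.newFixedThreadPool()`, collects their results through `Future` objects, and shuts the pool down when done.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

class ExecutorDemo {
  // Computes 1^2 + 2^2 + ... + n^2, submitting one Callable task per term
  static long sumOfSquares(int n) throws InterruptedException, ExecutionException {
    ExecutorService pool = Executors.newFixedThreadPool(4); // at most 4 tasks run at once
    try {
      List<Future<Long>> futures = new ArrayList<>();
      for (int i = 1; i <= n; i++) {
        final long value = i;
        Callable<Long> task = () -> value * value;
        futures.add(pool.submit(task)); // tasks beyond 4 wait in the pool's queue
      }
      long total = 0;
      for (Future<Long> f : futures) {
        total += f.get(); // blocks until that task has finished
      }
      return total;
    } finally {
      pool.shutdown();                             // accept no new tasks
      pool.awaitTermination(10, TimeUnit.SECONDS); // wait for worker threads to exit
    }
  }

  public static void main(String[] args) throws Exception {
    System.out.println(sumOfSquares(10)); // 385
  }
}
```

Calling `shutdown()` in a `finally` block matters because the default pool threads are non-daemon threads and would otherwise keep the JVM running after `main()` returns.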

The java.util.concurrent utilities are preferred over traditional synchronization primitives such as synchronization and volatile variables because the java.util.concurrent utilities abstract the underlying details, provide a cleaner and less error-prone API, are easier to scale, and can be enforced using policies.

Explicit Locking

The java.util.concurrent.locks package provides the ReentrantLock class that has additional features that are missing from intrinsic locks. For example, the ReentrantLock.tryLock() method returns immediately when another thread is already holding the lock. Acquiring and releasing a ReentrantLock has the same semantics as acquiring and releasing an intrinsic lock.
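The following minimal sketch (the `TryLockDemo` class and its account-style methods are hypothetical) shows the usual `lock()`/`unlock()` idiom, with the release in a `finally` block, alongside `tryLock()`, which gives up immediately instead of blocking when another thread holds the lock.

```java
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {
  private final ReentrantLock lock = new ReentrantLock();
  private int balance;

  // Blocking acquisition: same semantics as entering a synchronized block
  void deposit(int amount) {
    lock.lock();
    try {
      balance += amount;
    } finally {
      lock.unlock(); // always release, even if the body throws
    }
  }

  // Non-blocking attempt: returns false at once if another thread holds the lock
  boolean tryDeposit(int amount) {
    if (!lock.tryLock()) {
      return false;
    }
    try {
      balance += amount;
      return true;
    } finally {
      lock.unlock();
    }
  }

  int balance() {
    lock.lock();
    try {
      return balance;
    } finally {
      lock.unlock();
    }
  }

  // Shows tryLock() failing while another thread (here, the caller) holds the lock
  static boolean tryWhileHeldElsewhere() throws InterruptedException {
    TryLockDemo demo = new TryLockDemo();
    demo.lock.lock(); // the calling thread holds the lock
    try {
      boolean[] acquired = new boolean[1];
      Thread other = new Thread(() -> acquired[0] = demo.tryDeposit(10));
      other.start();
      other.join();
      return acquired[0]; // false: tryLock() did not wait
    } finally {
      demo.lock.unlock();
    }
  }

  public static void main(String[] args) throws InterruptedException {
    System.out.println(tryWhileHeldElsewhere()); // false
  }
}
```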

6 Comments

Masaki Kubo

The following code needs more work although I could sense the intention of the code from the comments. All four assignments will just be executed regardless of Thread 1 or Thread 2.

```java
class Assign {
  public synchronized void doSomething() {
    // Perform Thread 1 actions
    x = 1;
    y = 2;
    // Perform Thread 2 actions
    r1 = y;
    r2 = x;
  }
}
```

David Svoboda

True. We reasoned that doing the necessary threading overhead would have bloated the code too much. My current hunch is to scrap the code and just make a thread table. Something like:

Suppose a function creates two threads that do the following:

| Thread 1 | Thread 2 |
|---|---|
| x = 1 | r1 = y |
| y = 2 | r2 = x |

Would this be clearer?

David Svoboda

Oops, I just saw that my thread table is already in the text.

I've changed the code to do exactly what the text says. Comments?

Masaki Kubo

David, the first code example seems unable to guarantee the following sentence because we are not certain which thread terminates first.

So, how about changing the code to something like:

Yozo TODA

wow, the code rendering looks broken.

the proposed code adds t1.join() to ensure thread1 is completed before thread2:

```java
t1.start();
try {
  t1.join();
} catch (InterruptedException ie) {
  // perform exception handling
}
t2.start();
```

or,

```java
try {
  t1.start();
  t1.join();
  t2.start();
} catch (InterruptedException ie) {
  // perform exception handling
}
```

David Svoboda

Fixed